When the film “The Imitation Game” debuted in 2014, conversations about artificial intelligence (AI) entered the mainstream. The film told the story of Alan Turing, widely recognized as one of the fathers of AI. Although many people think of AI as an elusive, new concept, it has been around since the early 1950s, when Turing posed the billion-dollar question, “Can machines think?”

Boasting over 70 years of research and development, AI is now a vast field of science that has expanded to solve complex problems that previously required extensive manpower and resources.

At SparkCognition, we are dedicated to solving these critical problems by harnessing insights from advanced technologies and delivering world-class AI solutions to businesses on a global scale. However, as much as we value innovation and progress, there is also value in looking back. In order to appreciate where the field of AI is today and where it’s heading tomorrow, we must first acknowledge the journey AI has taken in years past.

Dartmouth summer research project: A catalyst for the future of AI

In 1956, a small group of scientists gathered at Dartmouth College in New Hampshire for the Dartmouth Summer Research Project on Artificial Intelligence. The group consisted of John McCarthy, an assistant mathematics professor at Dartmouth College; Marvin Lee Minsky, a Harvard Junior Fellow in Mathematics and Neurology; Nathaniel Rochester, the Manager of Information Research at IBM Corporation; and Claude Shannon, an engineer and mathematician at Bell Telephone Laboratories.

McCarthy is recognized as the man who officially coined the term “artificial intelligence,” but each individual played an important role in executing this research, and they were each highly esteemed in their respective fields. This project brought together some of the brightest minds in computing and cognitive science, serving as a catalyst for the field of AI.

Frank Rosenblatt and the Perceptron

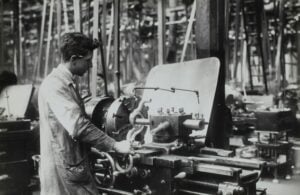

In the year following the Dartmouth Summer Research Project, Frank Rosenblatt, a research psychologist and project engineer at the Cornell Aeronautical Laboratory in New York, demonstrated the basic structure of a neural network: the Perceptron.

Rosenblatt’s electromechanical Perceptron, now sitting proudly in the Smithsonian, was a simple precursor of modern neural networks. Neural nets are a subcategory of machine learning that loosely mimics the behavior of the human brain using simple, interconnected processing nodes that send data to and receive data from one another. These networks learn to perform a specific task by analyzing training samples, adjusting their internal weights so that accuracy improves over time. Today, this approach allows us to classify large sets of data at high speed.
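To make the idea of learning from training samples concrete, here is a minimal sketch of the classic perceptron learning rule in software. It is an illustration only, not a reconstruction of Rosenblatt's hardware: the function names, the logical AND training set, the learning rate, and the number of epochs are all assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (input values, target label of 0 or 1)


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Fire (return 1) when the weighted sum of the inputs exceeds zero."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(
    samples: Sequence[Sample], epochs: int = 20, learning_rate: float = 1.0
) -> Tuple[List[float], float]:
    """Nudge the weights toward the target each time a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training set: the logical AND of two binary inputs.
AND_SAMPLES: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, x) for x, _ in AND_SAMPLES]
print(predictions)  # [0, 0, 0, 1]
```

Each pass over the samples corrects the weights only when a prediction is wrong, which is the sense in which accuracy improves over time. A single perceptron can only learn patterns that a straight line can separate, which is why modern networks stack many such nodes in layers.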

Rosenblatt’s Perceptron model was an important development that shifted what was essentially an academic concept into a physical representation of the neural network, a significant aspect of AI.

The first AI winter

Throughout the 1960s, many important entities, including the U.S. and British governments, were eager to fund more AI research, excited by what looked like the 20th century’s greatest disruptive innovation. However, for all the success AI has had, its history wouldn’t be complete without mentioning the first major pullback it suffered, and why.

In November of 1966, the Automatic Language Processing Advisory Committee (ALPAC) published a report on machine translation, one of the most prominent AI research areas of the time, concluding that it had no immediate or foreseeable prospects for usefulness. This convinced the U.S. government to pull back on funding, granting it only to research proposals that contained detailed timelines and deliverables.

This report was followed in 1973 by the Lighthill Report, which also delivered a negative analysis of the future of AI, specifically of how it could be applied to military and governmental purposes. This prompted the British government to withdraw most of its funding for the field.

However, there are specific reasons why these adverse analyses occurred, why AI was judged ineffective, and why that assessment no longer holds.

The determination that AI had no potential for future use resulted from factors specific to the late 1960s and early 1970s. There was a limited amount of data to capture, too little memory to store it, and too little processing speed and computing power to draw insights from it.

In contrast to that era, there is now a vast amount of data available to use. In fact, it is estimated that by 2025 the datasphere will contain roughly 175 zettabytes, an astronomical difference from the amount of data available in the 1960s and 1970s. The memory capacity of machines has increased substantially, AI researchers now enjoy rapid processing speeds, and there is significantly more computing power. As the volume of data and our ability to process it continue to grow, the value of the insights drawn from it will grow in kind.

Expert systems

Following the first AI winter, a new era of AI commenced.

There was a major surge in funding in the early and mid-1980s, which grew from a few million dollars to more than $1 billion by 1988. Developing commercial products became one of the main goals of this period, encouraged by renewed governmental and general interest in AI technology.

One of the most noteworthy applications of AI in this era was the expert system: a computer program that stored large amounts of data and simulated the decision-making process of a human expert, with that expert’s knowledge boiled down to “if, then” rules. Expert systems became popular and began saving businesses large sums of money, which in turn promoted the use and research of AI systems.
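The “if, then” approach can be sketched in a few lines. The example below is a minimal forward-chaining rule engine, assuming a made-up maintenance scenario: the rule set, the fact names, and the `infer` function are hypothetical and are not taken from any historical expert system.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

# A rule reads: IF every condition is a known fact, THEN add the conclusion.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical rules an equipment-maintenance expert might supply.
RULES: List[Rule] = [
    (frozenset({"engine_overheating", "coolant_low"}), "coolant_leak_suspected"),
    (frozenset({"coolant_leak_suspected"}), "schedule_inspection"),
    (frozenset({"vibration_high"}), "check_bearings"),
]


def infer(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Apply the rules repeatedly until no new conclusions can be drawn."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


conclusions = infer({"engine_overheating", "coolant_low"}, RULES)
print(sorted(conclusions))
```

Starting from two observed facts, the engine chains the first rule into the second and recommends an inspection, while the unrelated bearing rule never fires. The expertise lives entirely in the hand-written rules, which is both the strength of the approach and the reason such systems were costly to build and maintain.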

Where we are now

After one more brief lull in AI development, the field finally boomed.

In 1997, IBM’s Deep Blue chess computer prevailed over Garry Kasparov, then the reigning world chess champion, by drawing on its enormous computing power. This event demonstrated the “thinking” abilities of a computer and brought AI back into the public eye.

By 2010, common applications of AI such as voice recognition, speaker recognition, and object recognition had come into everyday use. Although these capabilities had been built earlier, millions of people could now access them through applications on their electronic devices.

Since then, we have witnessed incredible AI developments, such as:

- The Netflix machine learning algorithm used for movie/TV show recommendations

- IBM Watson’s victory over Jeopardy! champions

- AlphaGo defeating Lee Sedol, one of the world’s top Go players, at his own game

- Apple’s Siri showcasing natural language processing abilities

- Tesla’s self-driving car

- Amazon’s recommendations engine

This short list is merely a sample of the world-class AI capabilities that continue to advance rapidly.

As individuals, we have come to rely on this technology, but there are also fundamental ways in which businesses can and will rely on it, especially as more and more companies realize the value of advanced AI solutions in a competitive marketplace. At SparkCognition, it is our mission to work with industries worldwide to deliver AI solutions that drive real-world value, empowering organizations to build more sustainable, safer, and more profitable businesses.